I’m over the endless attempts to justify, contextualize, and understand this project. Let’s call it art and let it remain mysterious. I’m going to continue doing it whatever happens anyway !

****

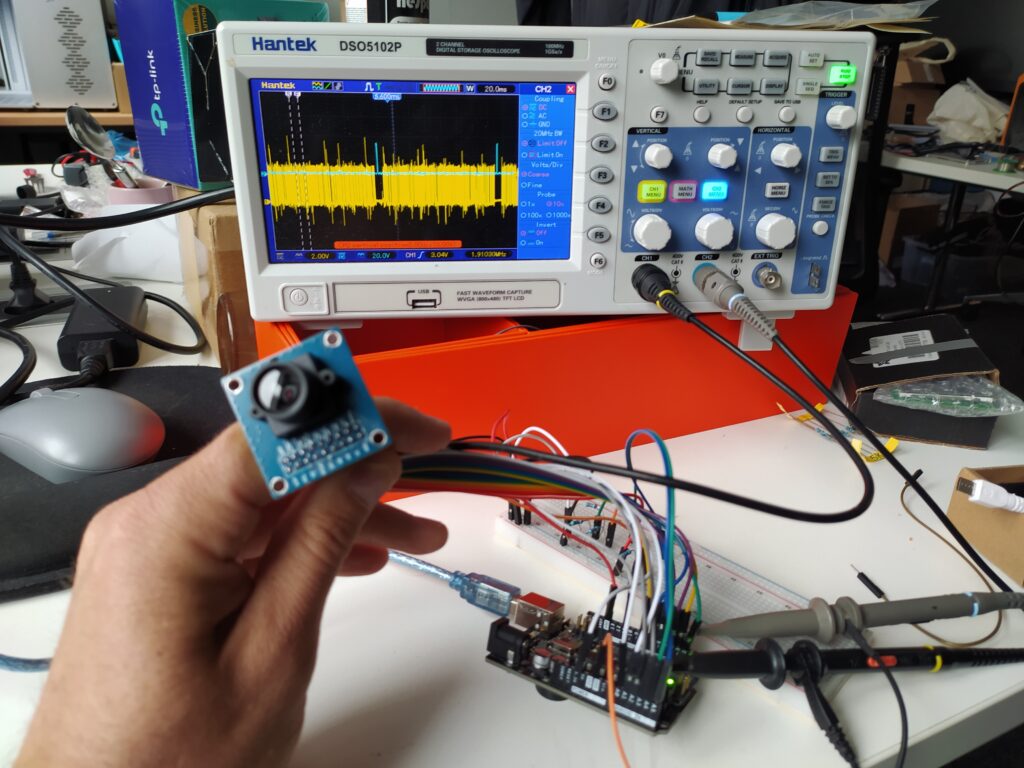

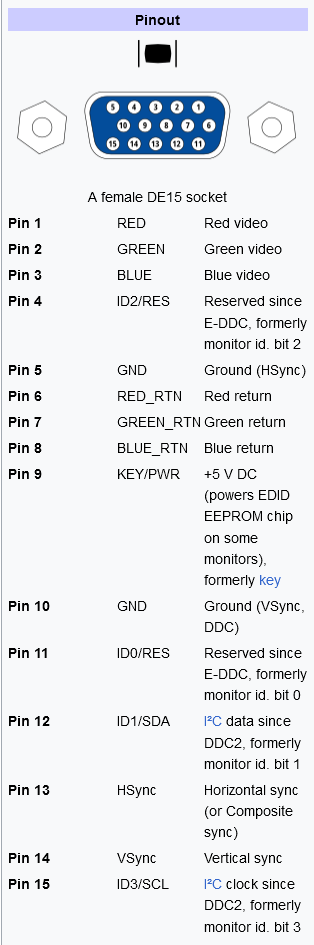

I followed this tutorial to connect the OV7670 to the Arduino :

https://circuitdigest.com/microcontroller-projects/how-to-use-ov7670-camera-module-with-arduino

EDIT here is a better version of the same code : https://github.com/ComputerNerd/ov7670-no-ram-arduino-uno

And making these adjustments :

- Make OCR2A = 2 (instead of 0) on line #535. This causes the XCLK to become about 2.667MHz (instead of 8MHz for OCR2A = 0)

- On line #590 which says writeReg(0x11, 10), change the second argument to 31, so that it becomes writeReg(0x11, 31). This, according to the datasheet, sets the PCLK prescaler to 32 (that is, the PCLK becomes 32 times slower than the XCLK).

- I lowered the serial baud rate by changing this value to 7 : UBRR0L = 7;
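As a rough check of the numbers in these adjustments, here is a small Python sketch. It assumes a 16 MHz Arduino Uno, Timer2 with no prescaler toggling the XCLK pin, and the standard AVR USART baud formula. The function names are made up for illustration, and whether the sketch runs the UART in double speed mode is not something I have confirmed, so both baud rates are shown.

```python
F_CPU_HZ = 16_000_000  # assumption: a 16 MHz Arduino Uno


def xclk_hz(ocr2a, prescaler=1):
    """Timer2 toggling its output pin: f = F_CPU / (2 * N * (1 + OCR2A))."""
    return F_CPU_HZ / (2 * prescaler * (1 + ocr2a))


def pclk_hz(xclk, clkrc_value):
    """OV7670 register 0x11: the low 6 bits hold (divider - 1)."""
    return xclk / ((clkrc_value & 0x3F) + 1)


def baud_rate(ubrr, double_speed=False):
    """AVR USART: F_CPU / (16 * (UBRR + 1)), or 8 instead of 16 in double speed mode."""
    return F_CPU_HZ / ((8 if double_speed else 16) * (ubrr + 1))


if __name__ == "__main__":
    xclk = xclk_hz(2)
    print(f"XCLK = {xclk / 1e6:.3f} MHz")
    print(f"PCLK = {pclk_hz(xclk, 31) / 1e3:.2f} kHz")
    print(f"baud = {baud_rate(7):.0f} (normal) or {baud_rate(7, True):.0f} (double speed)")
```

With OCR2A = 2 this gives the 2.667MHz quoted above, and dividing by 32 puts the pixel clock at roughly 83 kHz.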

I’ve checked the pixel clock, one bit of the color data, HSYNC and VSYNC and they all seem normal ! I’m not sure if by default the camera was outputting this or if it really did need to be configured first by the Arduino.

EDIT: This thing sends QVGA (320×240 pixels) but at a super slow rate, more than a second for each image. I can’t send this directly to a big screen because it is too slow. I could send it to the FPGA, have it store the low res image in the SRAM, and then send higher speed VGA by playing back the SRAM recording at a higher speed ?
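To get a feel for why it is so slow and whether the SRAM idea is plausible, here is a back-of-the-envelope Python sketch. It assumes one byte per pixel, 8N1 serial framing (10 bits on the wire per byte), and an 8-bit-wide SRAM with 18 address lines like the one used in the Verilog module later in this post. The baud rates tried are only candidates, not measured values.

```python
def frame_bytes(width=320, height=240, bytes_per_pixel=1):
    """Size of one frame, assuming one byte per pixel by default."""
    return width * height * bytes_per_pixel


def serial_frame_seconds(n_bytes, baud, bits_per_byte=10):
    """8N1 framing puts 10 bits on the wire for every data byte."""
    return n_bytes * bits_per_byte / baud


def sram_bytes(address_bits=18):
    """Capacity of an 8-bit-wide SRAM with the given number of address lines."""
    return 2 ** address_bits


if __name__ == "__main__":
    size = frame_bytes()
    for baud in (125_000, 250_000, 1_000_000):
        print(f"{baud:>9} baud : {serial_frame_seconds(size, baud):.2f} s per frame")
    print(f"frames that fit in SRAM : {sram_bytes() // size}")
```

Under these assumptions a QVGA frame is 76800 bytes, so it only gets under a second per frame at around 1 Mbaud, and three whole frames would fit in the SRAM.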

****

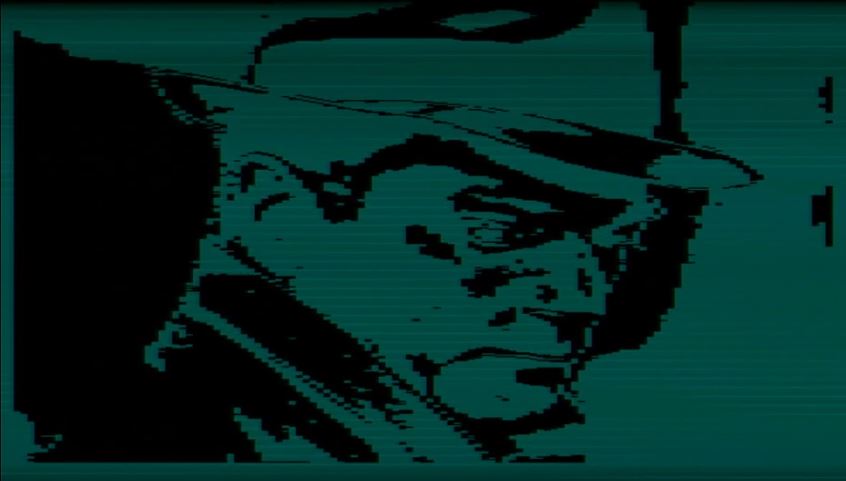

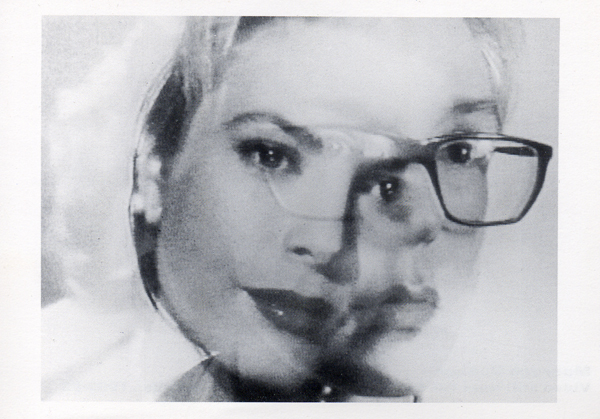

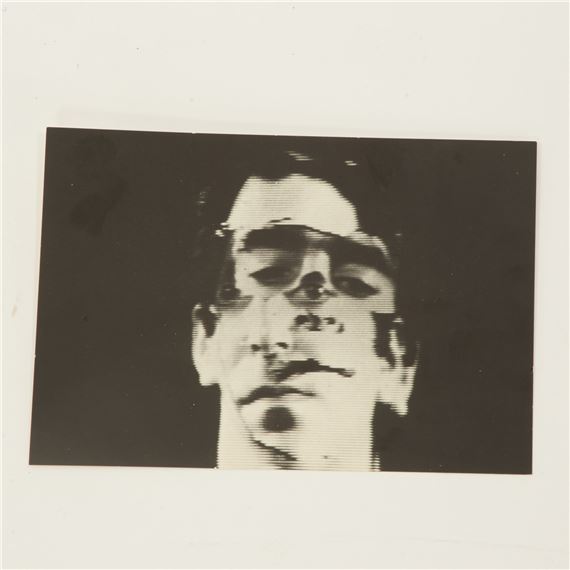

OK, I have got rpi recording working reliably 🙂 I also played with different clock divisions, recorded with the rpi clock and played back (PB) with the FPGA clock, tried recording animations (failed), and tried recording two images in different parts of SRAM.

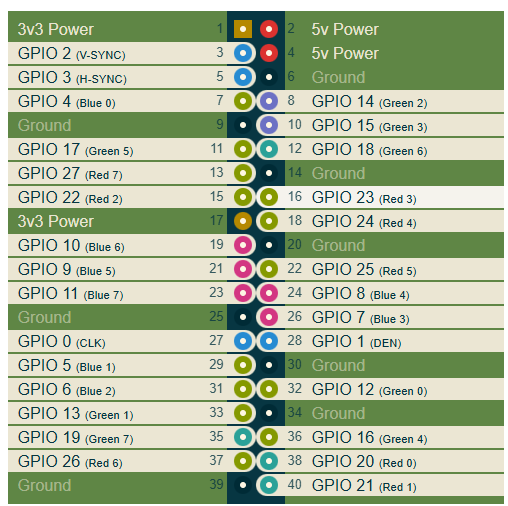

My process so far : I keep the programmer plugged in but disconnect from the USB after uploading. I reset the FPGA after programming every time (unplug and replug the USB), and deactivate and reactivate the recording device in OBS studio each time. I’m using male-female jumpers to go from the rpi to the FPGA. The rpi is powered separately. I’m using a VGA capture and OBS studio.

Left is the original (Green 5 in DPI mode) and right is the playback (inverted) from SRAM :

Here is the code :

module verilog(

input wire rpi_DEN,

input wire rec,

input wire clk,

input wire rpi_h_sync,

input wire rpi_v_sync,

input wire rpi_color,

output reg [17:0] addr,

inout wire [7:0] io,

output wire cs,

output reg we,

output wire oe,

output wire h_sync,

output wire v_sync,

output reg [3:0] r_out,

output reg [3:0] g_out,

output reg [3:0] b_out

);

assign cs = 0;

assign oe = 0;

assign h_sync = rpi_h_sync;

assign v_sync = rpi_v_sync;

wire [7:0] data_in;

wire [7:0] data_out;

assign data_in[7:0] = (rpi_color == 1'b1) ? 8'b11111111 : 8'b00000000; // color from rpi

reg [7:0] a, b;

assign io = (rec==0) ? a : 8'bzzzzzzzz;

assign data_out = b;

reg [3:0] counter = 0; //our clock divider

//SRAM address counter

always @(posedge clk) begin

counter <= counter + 1;

if (counter[2]) begin

if(rpi_v_sync) begin // reset the SRAM each time we draw a new frame

addr <= 0;

end

else begin

addr <= addr+1;

end

end

end

always @(posedge counter[2]) begin

b <= io;

a <= data_in;

if (rec==0) begin

we <= addr[0]; //not sure why it isn't the inverse of addr[0] but that doesn't make the inverse on 'scope

end

else begin

we <= 1;

end

end

always @(posedge counter[2]) begin

if ((rec==0) && (a==8'b11111111))

begin

r_out[0] <= 1'b0;

r_out[1] <= 1'b0;

r_out[2] <= 1'b0;

b_out[0] <= 1'b0;

b_out[1] <= 1'b0;

b_out[2] <= 1'b0;

g_out[0] <= 1'b0;

g_out[1] <= 1'b0;

g_out[2] <= 1'b0;

end

else if ((rec==0) && (a==8'b00000000))

begin

r_out[0] <= 1'b1;

r_out[1] <= 1'b1;

r_out[2] <= 1'b0;

b_out[0] <= 1'b1;

b_out[1] <= 1'b1;

b_out[2] <= 1'b0;

g_out[0] <= 1'b1;

g_out[1] <= 1'b1;

g_out[2] <= 1'b0;

end

else if ((rec==1) && (b==8'b11111111)) //data_out not b ??

begin

r_out[0] <= 1'b1;

r_out[1] <= 1'b0;

r_out[2] <= 1'b1;

b_out[0] <= 1'b1;

b_out[1] <= 1'b0;

b_out[2] <= 1'b1;

g_out[0] <= 1'b1;

g_out[1] <= 1'b0;

g_out[2] <= 1'b1;

end

else

begin

r_out[0] <= 1'b1;

r_out[1] <= 1'b0;

r_out[2] <= 1'b0;

b_out[0] <= 1'b1;

b_out[1] <= 1'b0;

b_out[2] <= 1'b0;

g_out[0] <= 1'b1;

g_out[1] <= 1'b0;

g_out[2] <= 1'b0;

end

end

endmodule

And the constraints :

set_io clk 58

set_io rpi_h_sync 62

set_io rpi_v_sync 61

set_io rpi_color 52

set_io h_sync 76

set_io v_sync 97

set_io rec 47

set_io rpi_DEN 63

set_io r_out[0] 91 //for vga

set_io r_out[1] 95 //for vga

set_io r_out[2] 96 //for vga

set_io b_out[0] 87 //for vga

set_io b_out[1] 88 //for vga

set_io b_out[2] 90 //for vga

set_io g_out[0] 75 //for vga

set_io g_out[1] 74 //for vga

set_io g_out[2] 73 //for vga

set_io io[0] 7

set_io io[1] 8

set_io io[2] 9

set_io io[3] 10

set_io io[4] 11

set_io io[5] 12

set_io io[6] 19

set_io io[7] 22

set_io addr[0] 4

set_io addr[1] 3

set_io addr[2] 2

set_io addr[3] 1

set_io addr[4] 144

set_io addr[5] 143

set_io addr[6] 142

set_io addr[7] 141

set_io addr[8] 120

set_io addr[9] 121

set_io addr[10] 24

set_io addr[11] 122

set_io addr[12] 135

set_io addr[13] 119

set_io addr[14] 134

set_io addr[15] 116

set_io addr[16] 129

set_io addr[17] 128

set_io cs 25

set_io oe 23

set_io we 118

And here are some of the documents that were helpful :

****

Some examples of video superposition/collage :

******

Check out these randomly generated 2D Turing Drawing Machines :

http://maximecb.github.io/Turing-Drawings/#

Would be so cool to do this in 3D

*****

I like this artist John Provencher’s project called [test] : https://verse.works/series/test-by-john-provencher

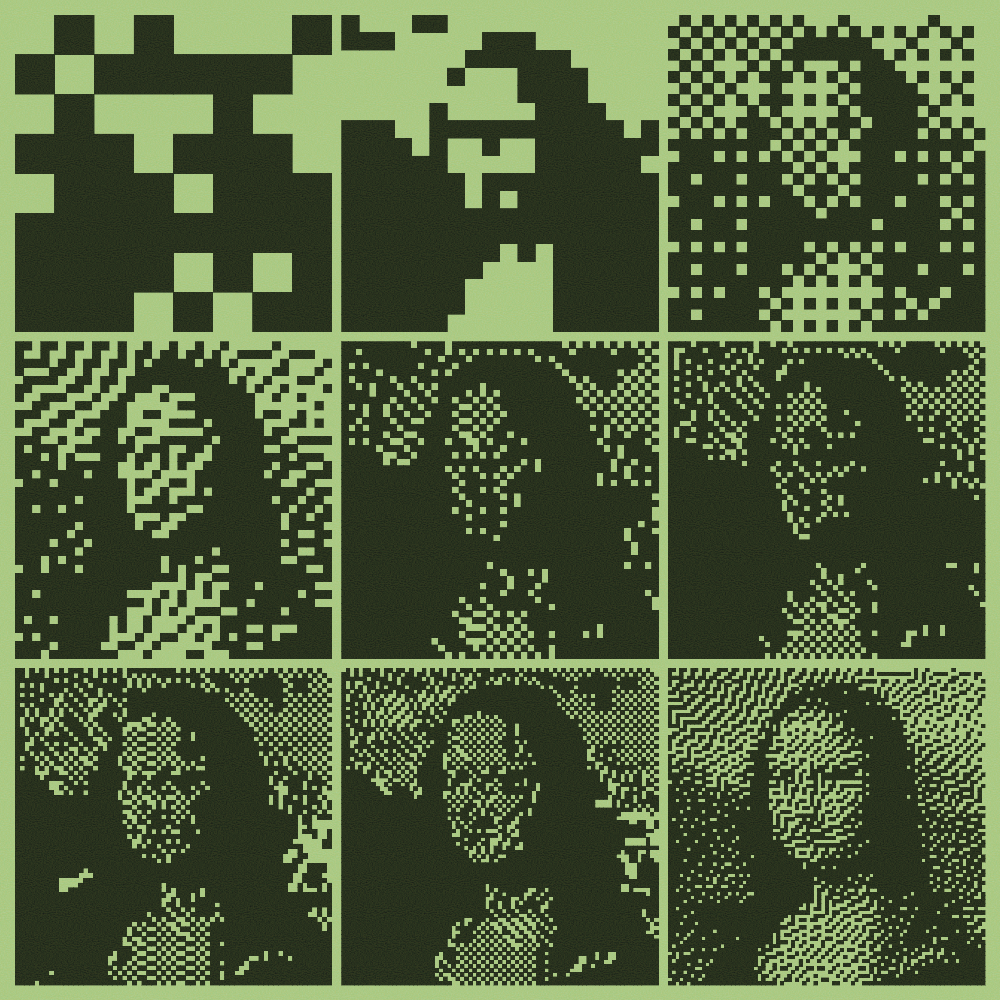

Here is the text explaining the work :

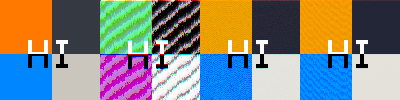

[test] is a generative script that tests various image processing/downscaling algorithms, exploring the image’s resilience to resolution and dither.

The Mona Lisa is often used for image-based algorithms [compression, noise reduction, image scaling and interpolation, edge detection, color correction, etc …] due to its detailed and recognizable features. Over time, the original painting remains in a continuous flux of digital transformations as it serves as a benchmark for these algorithms & programs. These endless variations push the material limits of the original painting… Pixels are stretched, assorted, down/up-sized, and re-arranged, making the Mona Lisa a digital motif of endless variation.

[test] is an exploration of form within the limitations of the past, where colors and resolution were limited and screens were textured, hued, faded. Even within these limitations, the generative potential of a single image is endless and the possibility for new images arise with each output.

*****

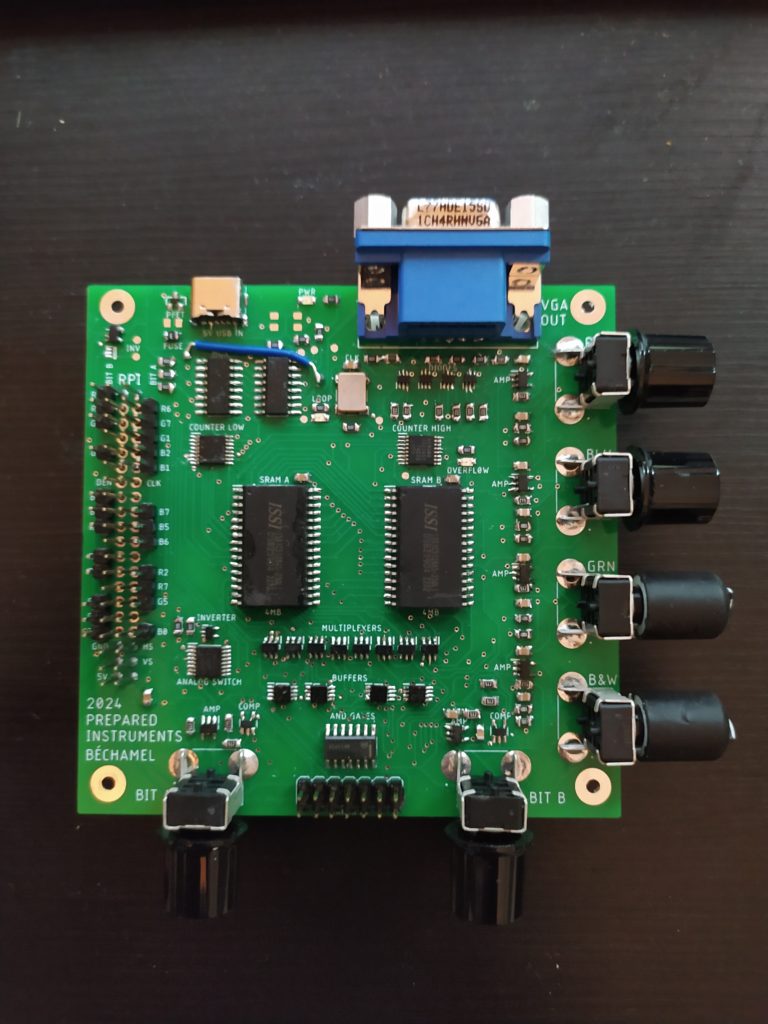

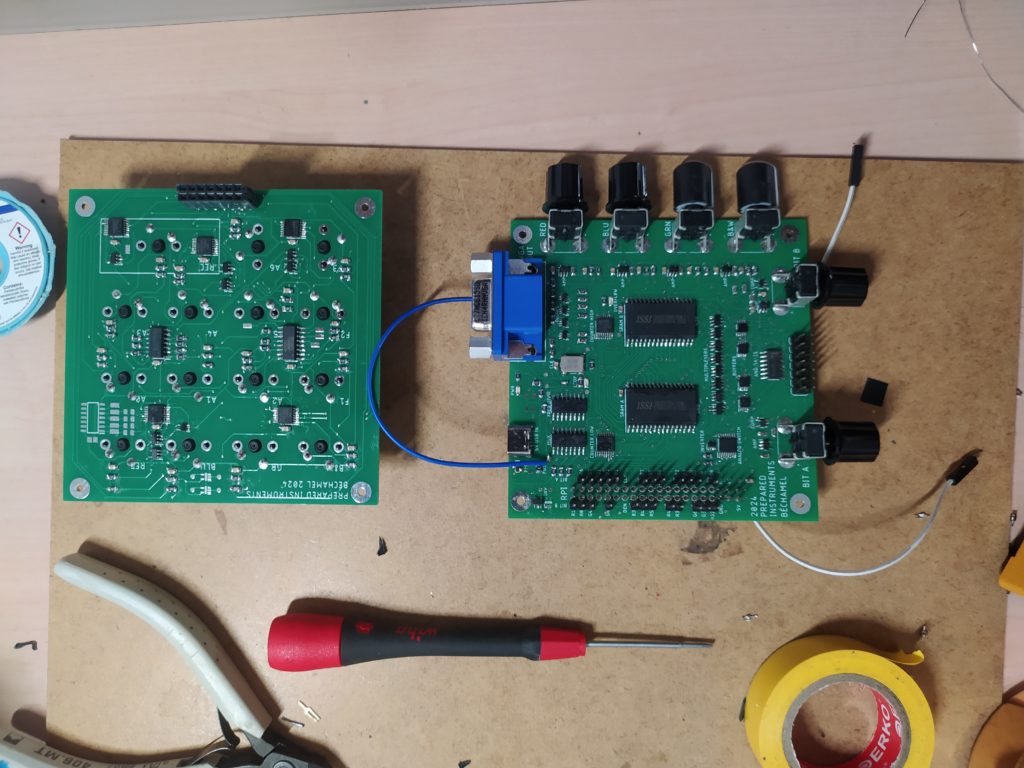

Memory :

This project has centered on electronic memory.

There were periods where the focus was on interface design, open-source project development, on learning about FPGAs, and testing different kinds of synthesizing and filtering circuits, but the project from the beginning has been about sampling in SRAM (after EEPROM, FLASH, and obsolete magnetic media experiments didn’t work).

I’ve tried different sizes of memory (8x 16MB SRAM all the way to the tiniest 512KB SRAM), different divisions of memory banks (from the FPGA addressed SRAM banks to the 8 channel single SRAM discrete logic board), different means of address incrementing (from discrete counters to FPGA).

I’ve also experimented with different inputs (ADCs to rpi parallel bit interface) and input thresholding (comparators, fast op amps).

The remaining memory experiment is recording an image from a video input and being able to play it back without it jumping around on the screen. After this, recording several images and then doing simple boolean operations on them based on an interactive keyboard.
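To make the "simple boolean operations" step concrete, here is a minimal Python model of combining two recorded 1-bit frames pixel by pixel. The frame layout (lists of rows of 0/1) and the key-to-operation mapping are hypothetical, chosen only for illustration. In hardware this would be a gate between the two SRAM outputs rather than code.

```python
def combine(frame_a, frame_b, op):
    """Apply a boolean operation pixel by pixel to two equal-sized 1-bit frames."""
    ops = {
        "and": lambda a, b: a & b,
        "or": lambda a, b: a | b,
        "xor": lambda a, b: a ^ b,
    }
    f = ops[op]
    return [
        [f(a, b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


# Hypothetical keyboard mapping: which key selects which operation.
KEYMAP = {"a": "and", "o": "or", "x": "xor"}

if __name__ == "__main__":
    left = [[1, 1, 0, 0], [1, 1, 0, 0]]
    right = [[1, 0, 1, 0], [1, 0, 1, 0]]
    for key, op in KEYMAP.items():
        print(key, op, combine(left, right, op))
```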

*****

Conflict minerals used in electronics :

Tungsten :

Tantalum :

Tin :

Gold :

****

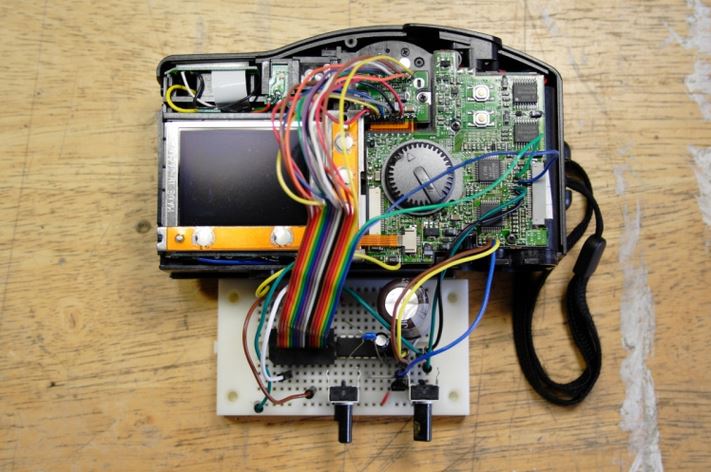

Circuit bending (instead of building circuits, modify already built ones) :

- Lick your finger and touch different components while the machine (not plugged in to mains voltage) is turned on, and watch/listen for differences.

- Jump two points on the board together while it is outputting something (sound, image). If the connections are unknown, use a 100 ohm or 1k resistor between the points.

- Use a marker on the circuit board itself to mark interesting locations.

- Restart the machine if it crashes.

- Try powering it below its intended voltage.

- Connecting an LED between two points can have an interesting effect.

- RAM chips on samplers can be bent in many different ways with patch bays.

- Take photos of the circuit and superpose information on it.

Things I’d like to circuit bend :

- DSLR cameras

- webcams

- CCTV cameras

- kid’s toy cameras

- video titler and other old video equipment

- camcorders

- video game consoles

- DVD player

From https://machineproject.com/2014/workshops/digital-cameras-circuit-bending-glitch-art/

The Speak Spellbinder :

*****

A nice looking modular synth case from reddit :

***

I have recently been helping other people on their projects and I noticed some differences between how I work on projects and how some other people do :

- Their goalposts don’t move, and they have a date when they need to have the thing ready.

- They are using technology as a means to do something specific and achieve a desired effect.

This has made me consider going back to my all discrete digital component video sampler, which just needs a built-in screen and the ability to freeze frames for it to be a finished project. Moving away from FPGAs and all the “possibilities” that they create by being reconfigurable, possibilities which somehow end up expanding the project and making it impossible to finish for me. (I’ve always had a tendency to work on the hardware and then get slogged down with the software development side. It’s almost like, because the code is changeable, at some point it doesn’t feel solid and files get lost, I forget what does what, and the project unravels).

I would need to add :

- a screen, so it is complete in itself and does not need external connections to be demoed.

- a discrete hardware VGA sync generator ? Or should I continue using the rpi sync signals ?

- a way to only record and play back when drawing from the top of the screen to avoid jumping ? This would need to be able to detect when the V sync signals the end of a frame and only allow playback and recording at a specific time (ANDing with CS on the SRAM?).
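The frame-aligned recording idea in the last point can be modelled as a tiny state machine. Below is a Python sketch of the behaviour only (not the hardware): a record request is held until the next rising edge of V sync, and the enable then stays high for exactly one frame. The signal names and the sample-per-clock lists are assumptions for illustration. In the discrete version the resulting enable would be what gets ANDed with CS or WE on the SRAM.

```python
def gate_to_frame(vsync, request):
    """Return a per-sample enable that spans exactly one whole frame.

    vsync   -- list of 0/1 samples, 1 while the vertical sync pulse is active
    request -- list of 0/1 samples, 1 while the user asks to record
    """
    armed = False   # a request is waiting for the next frame start
    active = False  # currently inside the gated frame
    prev = 0
    enable = []
    for v, r in zip(vsync, request):
        rising = v == 1 and prev == 0
        if rising:
            if active:
                active = False  # the gated frame just ended
            elif armed:
                active, armed = True, False  # start exactly on the frame boundary
        if r and not active:
            armed = True
        enable.append(1 if active else 0)
        prev = v
    return enable


if __name__ == "__main__":
    vsync = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0]
    request = [0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
    print(gate_to_frame(vsync, request))
```

Because the enable always starts and stops on a V sync edge, the recorded image should land at the same screen position on playback, whenever the button was pressed.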

***

Some little experiments I want to try :

With the Chip Shouter, to mess with the Arduino using VGAX and the FPGA doing VGA and HDMI and see if I can create any interesting visual glitches :

With this Geometric Optics Demo we have at the Cyber Campus, to assign different bit depths of a video signal to different lasers, send the lasers around mirrors and lenses etc, then decode it with photodiode receivers at the other end. I wonder if I can aim at different logic blocks as they are physically placed in the FPGA ?

Good post on the subject : https://electronics.stackexchange.com/questions/359373/how-to-transmit-data-with-a-laser-beam

Laser sensor module with lens and amp : https://www.amazon.fr/Waveshare-Laser-Receiver-Sensor-Transmitter/dp/B00NJNYQ9G

It could be similar to the Optoglitch project (https://hackaday.com/2019/02/20/optoglitch-is-an-optocoupler-built-for-distortion/), but with lasers for each bit value ? :

For the HDMI bending experiment :

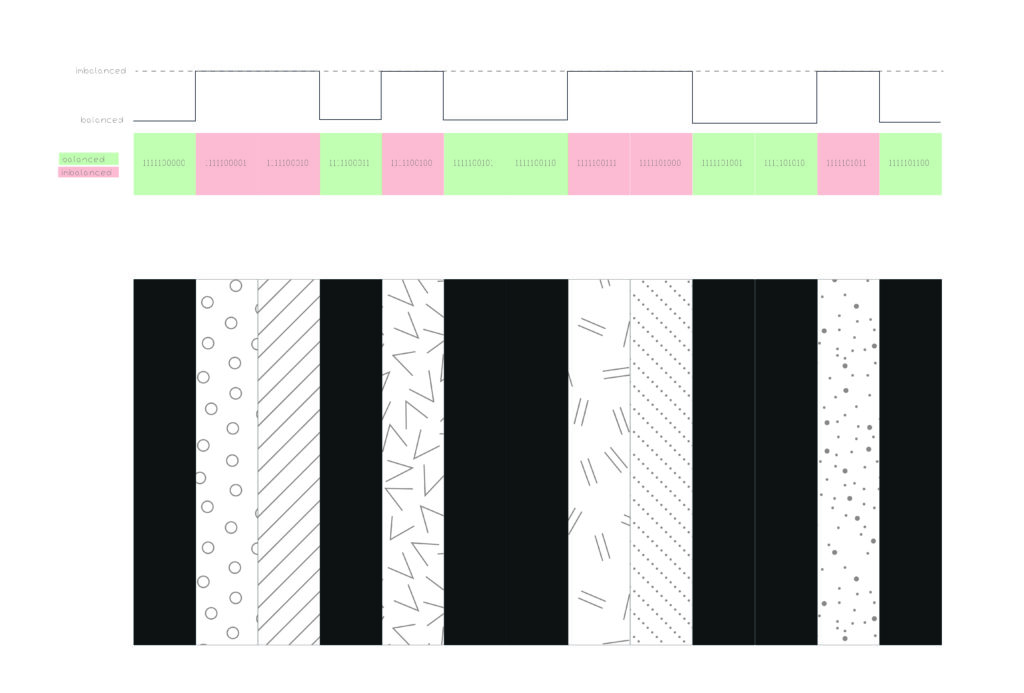

Do imbalances of different degrees create different glitches ? If so, I should map every different possible imbalanced 8 byte combination to a different pixel to explore what pattern it makes !

**********

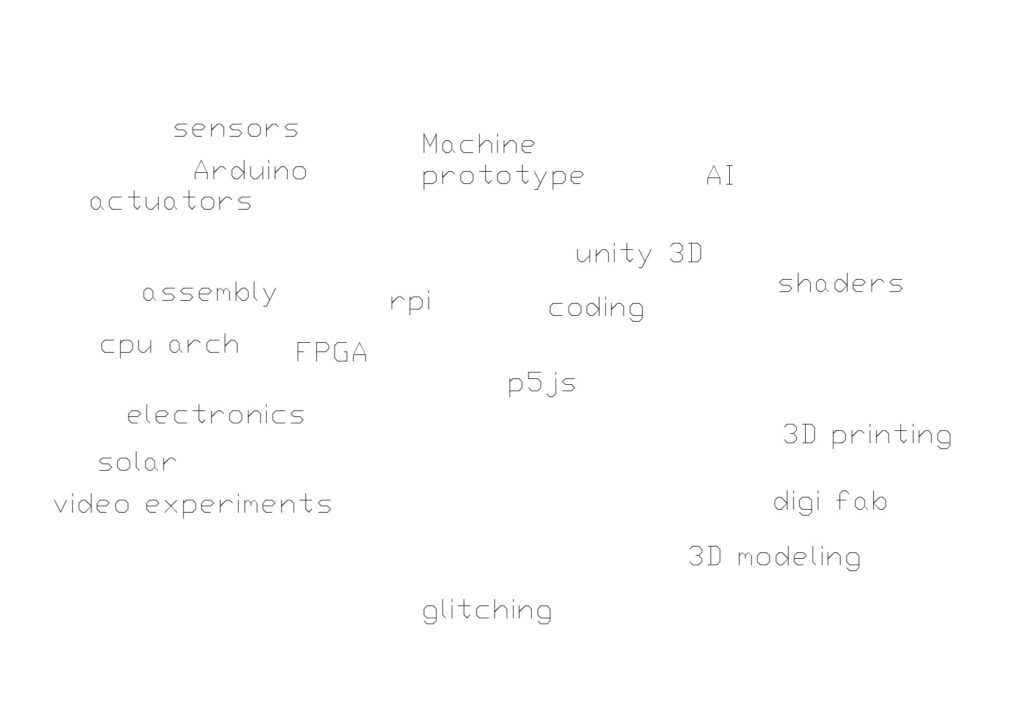

The tech workshops and classes I’ve done so far :

In terms of being more capable and employable as a teacher, I’d like to go more towards software, especially video games, renderers, shaders, and AI.

To that end, working on a simple procedurally generated cave exploring video game :

https://editor.p5js.org/merlinmarrs/sketches/pfvBYUSGB

I want to make a workshop on 2D game design, based on basic math principles and early video game hardware (so, no libraries, must be smaller than some size, uses tiny memory for sprites and fonts). Check out this under 13KB game design challenge : https://js13kgames.com/

https://www.gamemath.com/book/vectors.html

****

Just checked out the wiki on Video Synthesizers (https://en.wikipedia.org/wiki/Video_synthesizer) and Video Art (https://en.wikipedia.org/wiki/Video_art) and learned some things :

- Doing things in “Real Time” distinguishes video synths from 3D renderers. Video synthesis in analog and even digital was done before computers.

- In Chicago, there were video experiment sessions called “Electronic Visualization Events”

- “position, brightness, and color were completely interchangeable and could be used to modulate each other during …This led to various interpretations of the multi-modal synesthesia of these aspects of the image in dialogues that extended the McLuhanesque language of film criticism of the time”

- “Today, address based distortions are more often accomplished by blitter operations moving data in the memory, rather than changes in video hardware addressing patterns.”
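The difference between the two approaches in that last quote can be shown with a small Python sketch. Both functions produce the same horizontally skewed image: one reads the untouched frame through a distorted address, the other moves the data itself, blitter style. The function names and the per-row offset scheme are my own illustration, not taken from the article.

```python
def distort_by_address(frame, offsets):
    """Leave the data alone and read each row through a shifted address."""
    out = []
    for row, off in zip(frame, offsets):
        width = len(row)
        out.append([row[(x - off) % width] for x in range(width)])
    return out


def distort_by_blit(frame, offsets):
    """Move the data: copy each row into a new frame, rotated right by its offset."""
    out = []
    for row, off in zip(frame, offsets):
        off %= len(row)
        out.append(row[-off:] + row[:-off] if off else list(row))
    return out


if __name__ == "__main__":
    frame = [[1, 2, 3, 4]] * 3
    print(distort_by_address(frame, [0, 1, 2]))
    print(distort_by_blit(frame, [0, 1, 2]))
```

The address version is closer to what a counter-driven SRAM sampler does, since the stored image never changes and only the read pattern does.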

****

Cool article on glitches http://beza1e1.tuxen.de/lore/sparkling_tile.html

- The experts solving the problem are often faced with difficult to reproduce glitches. The solution is often to be patient and to systematically observe unexpected correlations, and also to deeply understand how the systems function. Having logs is important to see what happened right before the crash.

*****

Who is my audience ? I am reading lots of posts from Hacker News, am I now trying to appeal to a more engineering / larger tech literate crowd on the internet ?

How do I make something that people want, how can I build empathy as a designer instead of staying at the selfish research personal project phase ?

I think I am also thinking about how all my interests in tech all boil down to illusions. It is all just man made stuff. I should embrace that it is all a construction and just make pretty things instead of searching for “truth inside the black box”.

***

From this talk ( ), code should be written in the idiom of the language it is written in. You shouldn’t try to write Python-like code in JavaScript. Verilog code should look like Verilog code.

******

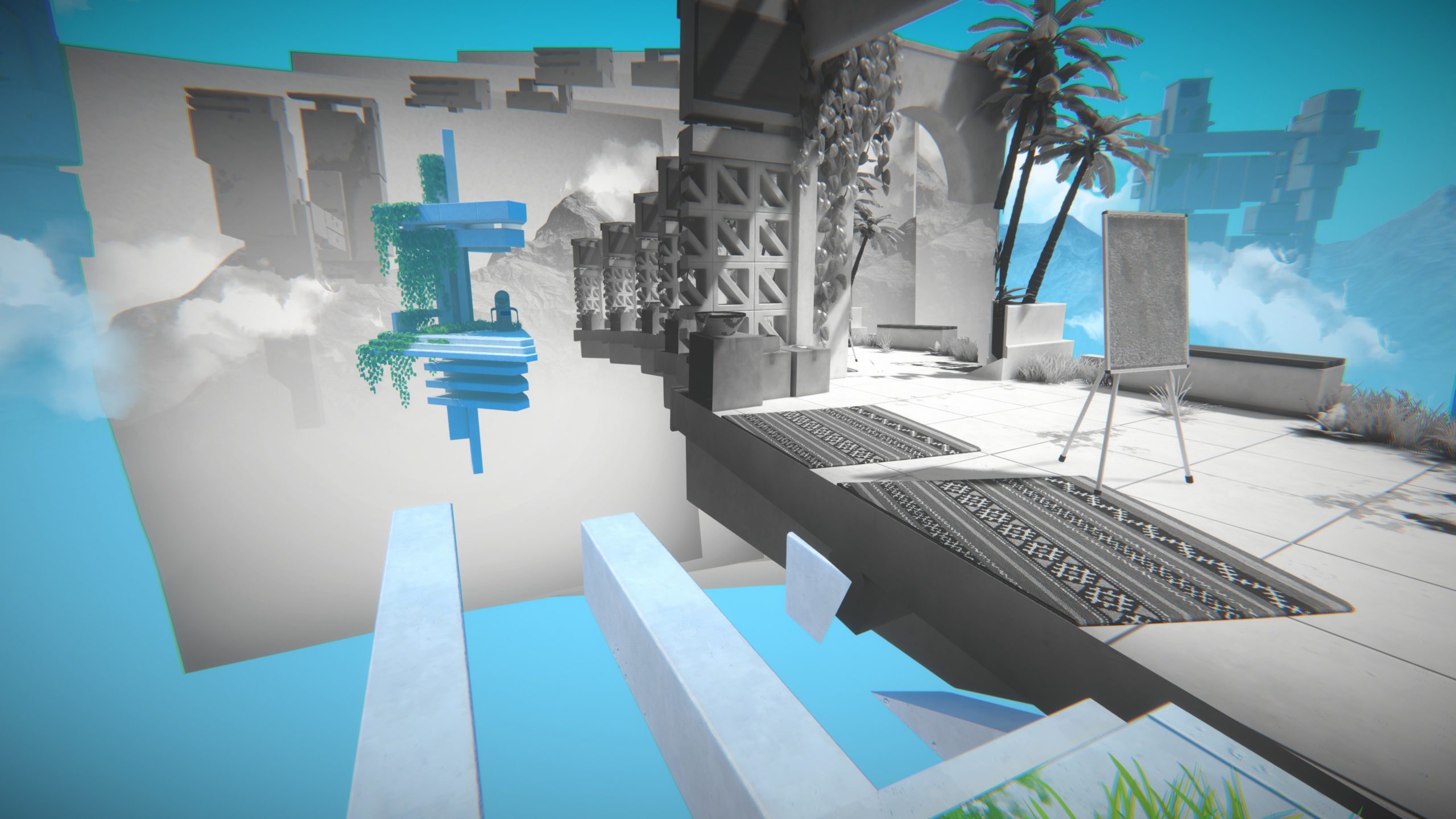

Some mindbending perspective 2D/3D video games :

Viewfinder

Superliminal

Beautiful indie games:

Sword and sorcery

World of Horror, which is made entirely in MS paint :

Papers please :

Thomas was alone (so beautiful how you can play as a pixel, and something so minimal works because of the text overlay and audio):

Pacific Drive (I love the interfaces)

Baba is you (beautiful coding game) :

Minit (love the field of vision with torch effect from Rogue) :

Rainworld :

Keep driving

Some notes :

- The attention to the player’s experience, and very consciously introducing them to the video game world in a step by step way.

- The importance of sound design in creating atmosphere.

- The fact that it is purely illusion creation, story telling. They let the player live a fantasy.

- There are a finite number of types of games, some include resource foraging, puzzle solving, side scrolling jump based games.

- The importance of lighting, especially glowing lights, shadows, and fog in creating atmosphere.

- 8 bit and 1 bit games are in fashion

I have been looking at indie games and their construction too. Small scale video games marry tech and art in a unique way. The Game Developers Conference channel (youtube.com/@Gdconf) is a cool place to see creators talking about their games.

On the rainworld game design (youtube.com/watch?v=sVntwsrjNe4). The artist/developer Joar Jakobsson advocates for using programming to create illusions, finding pragmatic, instead of purist, solutions. For their creatures, they have a physics + AI engine first and then a computationally expensive and more complex stylized layer is added on to that. Performance optimization concerns appear to help the creative development of the game. Another insight is that because they are working with made up creatures, no one can fault their representations (we could tell if a horse was moving funny).

The talk on the rendering of Inside (youtube.com/watch?v=RdN06E6Xn9E&t=1885s) covers various tricks to create the illusion of fog, quickly render flashlight cones, etc., often in a struggle against 8 bit values that leave bands on the screen. The tricks seem to involve adding different kinds of noise, down/up sampling, and using smooth minimum functions for lighting to eliminate these bands. The rendering pipeline, with its bandwidth constraints, and the artistic atmosphere are intertwined. Interestingly, this 2.5D game fixes the camera, which allows the artists working on the game to know exactly what will be seen by the player. They also mention how many effects they “stole” from other game developers, and they are sharing theirs in an open source ethos.
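To get a feel for two of the tricks mentioned, here is a small Python sketch of a smooth minimum and of noise added before quantization. These are common textbook forms (a polynomial smooth minimum and plain random dither), not necessarily the exact functions used in the talk. The parameter names are my own.

```python
import random


def smooth_min(a, b, k):
    """Polynomial smooth minimum: equals min(a, b) once |a - b| >= k."""
    h = max(k - abs(a - b), 0.0) / k
    return min(a, b) - h * h * k * 0.25


def quantize(value, levels=256, noise=0.0, rng=random):
    """Quantize a 0..1 value to `levels` steps, optionally adding noise first."""
    jitter = (rng.random() - 0.5) * noise
    step = round(value * (levels - 1) + jitter)
    return min(max(step, 0), levels - 1)


if __name__ == "__main__":
    print(smooth_min(2.0, 2.0, 1.0))  # blended region: dips below both inputs
    print(smooth_min(1.0, 5.0, 1.0))  # far apart: plain minimum
    rng = random.Random(0)
    dithered = [quantize(0.3, levels=2, noise=1.0, rng=rng) for _ in range(10000)]
    print(quantize(0.3, levels=2), sum(dithered) / len(dithered))
```

Without noise, a value of 0.3 on a 2-level output is always 0, which is what produces a hard band. With one step of noise the output flickers between 0 and 1 and averages out close to 0.3, trading the band for grain.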

The A Short Hike GDC talk describes how making the project short and easy to develop, keeping it improvisational, testing + sharing in process work and incorporating feedback, and having a deadline, made it all possible.

The Return of the Obra Dinn GDC talk describes how the creator works with an engineering “problem-solving” mentality, and that choosing a technical constraint becomes a fun problem to solve. He describes himself as OCD, and so a basic mechanical core task (often bureaucratic and ostensibly super boring) organizes the game. Inspired by games of old. Has a long term vision of game design : make something so different from other games that this is the only option, and that this won’t change any time soon. Every time he makes a game, he picks a completely new style and theme. Don’t be fixated on making everything perfect, even with bugs a thing can become appreciated and a classic. Do something you would enjoy playing.

The GDC for the design of FireWatch talks about making decisions based on how the game should function based on the priorities. If something isn’t directly in line with the main priority, leave it out of the game. They create a boolean database of decisions made by the player as they move through the game that impacts future possibilities of the game space. “Your design must … survive contact with players who have a lifetime of different experiences and assumptions than you”
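A "boolean database of decisions" could be as simple as a table of named true/false flags that later content checks against. Here is a minimal Python sketch of that idea. The class, method, and flag names are all hypothetical and are not taken from the talk or the game.

```python
class DecisionLog:
    """A flat table of named true/false facts about what the player has done."""

    def __init__(self):
        self.flags = {}

    def record(self, name, value=True):
        """Store a decision; anything never recorded counts as False."""
        self.flags[name] = bool(value)

    def allows(self, requires=(), forbids=()):
        """True if every required flag is set and no forbidden flag is set."""
        def has(name):
            return self.flags.get(name, False)

        return all(has(n) for n in requires) and not any(has(n) for n in forbids)


if __name__ == "__main__":
    log = DecisionLog()
    log.record("picked_up_radio")  # hypothetical flag name
    print(log.allows(requires=["picked_up_radio"], forbids=["ignored_call"]))
    print(log.allows(requires=["found_cave"]))
```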

GDC for the FAITH horror video game has the creator explain that lo-fi pixel art is perfect for the horror medium because it is based on the feeling “OMG WTF is that thing?” which is very achievable with a tiny number of pixels. Also, the importance of using a black background to create an open space feeling with objects placed within this field.

GDC for 8bit art talks about color cycling (https://en.wikipedia.org/wiki/Color_cycling)
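Color cycling animates an image by rotating entries in the palette while the stored pixel indices never change, which is why it was so cheap on old hardware. A minimal Python sketch of the idea, with a made-up four color palette and hypothetical function names :

```python
def cycle_palette(palette, start, end, shift=1):
    """Rotate palette entries start..end (inclusive) by `shift` positions."""
    span = palette[start:end + 1]
    shift %= len(span)
    rotated = span[-shift:] + span[:-shift] if shift else span
    return palette[:start] + rotated + palette[end + 1:]


def render(indexed_pixels, palette):
    """Look every pixel index up in the palette; the pixel data never changes."""
    return [[palette[i] for i in row] for row in indexed_pixels]


if __name__ == "__main__":
    palette = ["black", "dark blue", "blue", "light blue"]
    pixels = [[0, 1, 2, 3]]  # e.g. a strip of water next to a black bank
    for frame in range(3):
        print(render(pixels, palette))
        palette = cycle_palette(palette, 1, 3)  # only the water colors move
```

This is close in spirit to the SRAM sampler : the memory contents stay fixed and only the way they are interpreted on the way to the screen changes.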

GDC for Baba is you talks about the importance of quick game jams in development.

GDC on solo development (youtu.be/YaUdstkv1RE?si=psfnotyjUE3g4kGF), one speaker talks about the cone of possibilities arising from the pie chart of constraints :

This made me think about my synth project :

- I need a deadline !

- I need to set limits on what the project is and is not ! (Only taking input video and memory sampling, no sound, with mini screen though because otherwise it’s not clear what it is doing)

- I need an audience or a platform (like Steam, or Playstation games) that I’m aiming for. Perhaps this was what Marc was thinking about regarding the modular synth euro rack standard.

- It doesn’t have to be perfect, just good enough ! Several game devs said this.

- The last 10% clearly will take the most time : 90% !!

- I also need to use other people’s work (and cite it) in the form of designs and libraries.

********

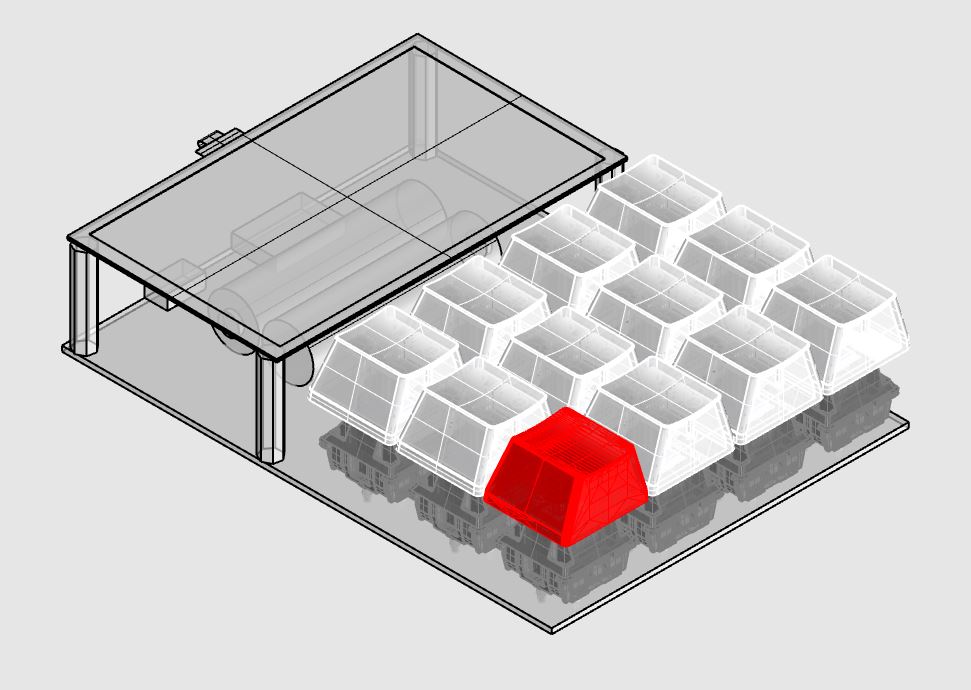

Back to a physical object : I do indeed need a small screen and a battery pack on this device for it to be legible and demo’able to the majority of people. It is not good enough that it works when plugged in to a bunch of things. To explore this avenue, I need to:

- pick up where I left off with the Gameboy style board that I didn’t really test to get the screen working.

- Get the rpi stable-screen sampling working on my current setup.

- Make a new design that incorporates everything I want but is less in the gameboy aesthetic and has more keys laid out tightly together :

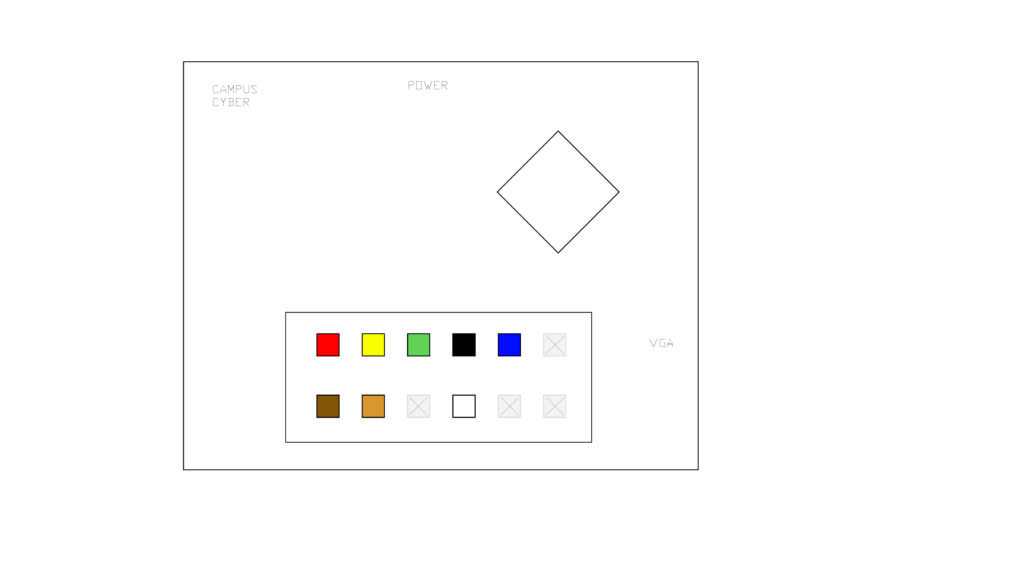

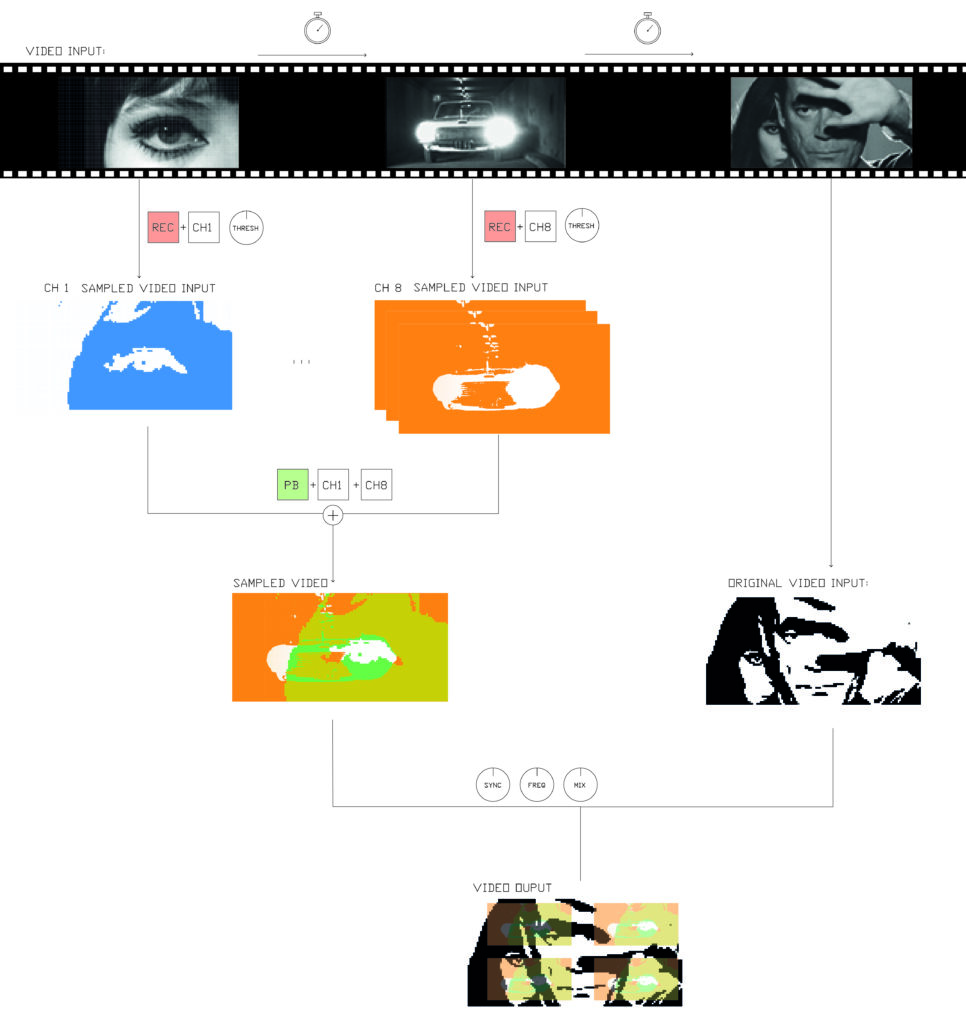

Here’s an attempt at a diagram showing the working of the sampler :

********

Stockhausen

And a great series of videos by Hainbach, including some rudimentary vinyl recording devices, bar code card samplers, flanging with two synchronized tape players, and a “Stockhausen speed-run” reproducing beautiful sounds with essentially just a sine oscillator, a splicer and a tape recorder. I realized that this is exactly what I was going for with my early prototypes of the video samplers, trying to construct compositions out of simple parts, and giving one hands-on experience of a time based medium.

*****

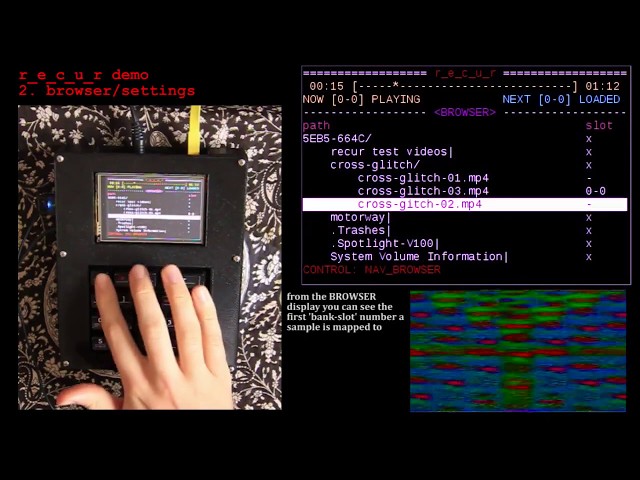

Checked out the world of hardware video samplers and discovered that most people use their laptops, so this may be an opportunity !

The OG is the Korg Kaptivator :

Snapbeat https://snapbeat.net/snapbeat-simple-lofi-hardware-sampler/

Here is an open-source one called the r_e_c_u_r, based on a rpi :

www.20.piksel.no/2020/11/21/r_e_c_u_r-an-open-diy-video-sampler/

And Gieskes arduino video sampler : https://gieskes.nl/visual-equipment/?file=gvs1#p3

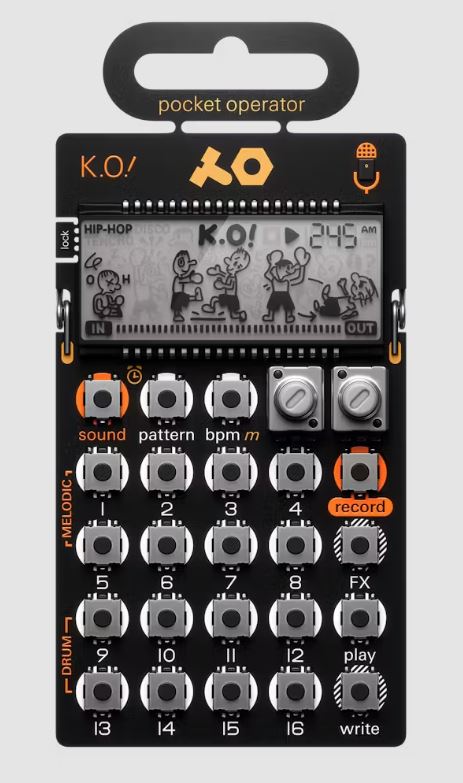

I also forgot about Teenage Engineering’s (audio) sampler :

****

I’m preparing a submission for the art track of DIS 2025, I am basically proposing slow oscillating VGA monitors. I’m referencing the experimental video art obsession with flowing water and connecting that to the idea of the “oceanic” to line up with the conference theme.

To get most of these effects I used a VCO (like part of a CD4046) and a rudimentary switched capacitor circuit taking in a second out of phase waveform.

Trying to show how visitors could interact with the oscillations, and could try to balance them in the middle of the screen :

Showing the circuit for generating the patterns :

****

Watched this teardown of the Formlabs Form 4 printer, which gives an idea of what real product engineering is. There is a big gap between making a quick prototype and making a fully integrated, ready for public use, machine. There is also a big team !

youtube.com/watch?v=cbm03jtWWL0

The conversation turns around the design and manufacturing considerations, the iterations and complexity of the project. It’s a combination of electronics, optics, mechanical engineering, and integration engineering (dissipating heat through the machine, cable management). The design philosophy of making the machine user serviceable is a big constraint. Designing for things that aren’t supposed to happen, like resin leakage, but may happen. A range of materials (aluminum, stainless steel, magnets, plastics) and manufacturing processes. There are people who work for months on a single component of the machine, with many iterations. They seemed to try to iterate in house before making expensive tooling. Sensors throughout, for motor rotation, resin level, to detect things being in place. Balancing concerns for robustness, weight, cost, stress resistance, leak protection, fire protection, air flow, heat flow, keeping things level and rigid, making everything work with the existing resin range they have. Using video recordings to observe the operation of parts. Lots of decisions to be made when faced with different design options.

****

FPGA project called Gamebub does everything in python running on an FPGA with a small screen and memory I think :

eli.lipsitz.net/posts/introducing-gamebub/

Also check out this interface for programming FPGAs via USB like they are external drives. Uses CRAM programming :

https://github.com/psychogenic/riffpga